
October 27, 2025

Go Beyond the Training Data and Build Dynamic AI Applications with LangChain

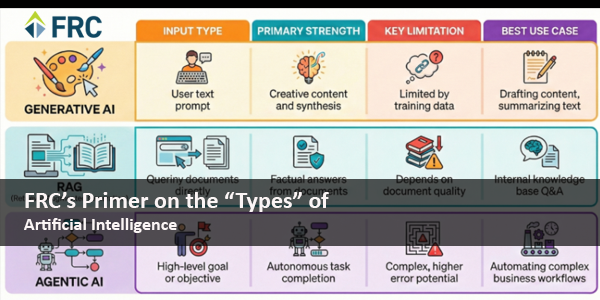

Large Language Models (LLMs) have transformed how we interact with information, offering unprecedented capabilities in understanding and generating human-like text. However, a common challenge arises when these models are applied to domain-specific tasks: their knowledge is limited to the data they were trained on. This means an LLM, no matter how advanced, cannot inherently answer questions about your company’s latest internal policy document, a recently published research paper, or proprietary sales data. This is where Retrieval-Augmented Generation (RAG) becomes indispensable, and frameworks like LangChain provide the toolkit to implement it.

The LLM Knowledge Gap: Why RAG?

At their core, LLMs are predictive engines. They excel at identifying patterns and generating text that aligns with the vast datasets they’ve ingested. This training process, however, creates a static knowledge base. If information is not part of their training corpus, they simply won’t know about it. Furthermore, when faced with a query outside their knowledge, LLMs can sometimes “hallucinate,” generating plausible but factually incorrect responses. This limitation makes them unsuitable for applications requiring precise, up-to-date, or proprietary information without an additional mechanism.

Retrieval-Augmented Generation (RAG) directly addresses this knowledge gap. Instead of relying solely on the LLM’s pre-trained knowledge, RAG introduces a retrieval step. When a user poses a question, the RAG system first retrieves relevant information from a designated knowledge base, such as your own documents, databases, or other data sources. This retrieved information is then provided to the LLM as additional context alongside the user’s original query, allowing the model to generate a response that is grounded in the provided facts.
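The "additional context alongside the user's original query" step can be sketched in a few lines of plain Python. This is a minimal illustration, not LangChain code: the function name `build_grounded_prompt`, the prompt wording, and the sample passages are all assumptions made up for the example, and the retrieval step is stubbed out with a hard-coded list.

```python
from __future__ import annotations


def build_grounded_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user's question into one prompt."""
    # Number each passage so an answer can be traced back to its source.
    context = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical passages standing in for what a retrieval step would return.
retrieved = [
    "Remote employees may expense up to $50 per month for internet service.",
    "Expense reports are due by the fifth business day of each month.",
]
prompt = build_grounded_prompt("What is the internet stipend?", retrieved)
print(prompt)
```

The resulting string is what would be sent to the model. Instructing the model to stay within the supplied context is one common way to discourage answers that are not grounded in the retrieved facts.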

Consider an analogy: imagine a brilliant academic who has read countless books (the LLM’s training data). If you ask this academic a question about a very specific, recent development not yet in any published book, they might speculate or admit they don’t know. Now, imagine you hand them a relevant, up-to-the-minute report on that development before they answer. Their response will be informed, accurate, and specific to the document you provided. RAG functions in a similar manner, ensuring the LLM’s response is both intelligent and factually anchored to your data.

LangChain: The Orchestrator of AI Applications

Implementing a RAG system involves several distinct steps, from ingesting data to retrieving relevant chunks and formulating a prompt for the LLM. While each step can be handled individually, coordinating them into a seamless, efficient workflow can be complex. This is where LangChain, an open-source framework, demonstrates its value.

LangChain acts as an orchestration layer, simplifying the development of applications that leverage LLMs. Its name, “LangChain,” reflects its core philosophy: enabling developers to chain together various components and tools to build sophisticated AI workflows. For RAG, LangChain provides a structured, modular approach, abstracting away much of the underlying complexity and allowing developers to focus on the application’s logic.
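The chaining idea itself is easy to sketch without the framework. The example below is an illustration in plain Python, not LangChain's implementation: the `Step` class and the three stand-in components are hypothetical, and the "model" is a lambda that only reports the prompt length. LangChain's expression language also joins components with a pipe operator, which is why `|` is used here.

```python
from __future__ import annotations

from typing import Any, Callable


class Step:
    """Wrap a function so steps can be joined with the | operator."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # a | b yields a new step that runs a, then feeds its output to b.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


# Hypothetical stand-ins for real components.
normalize = Step(lambda q: q.strip().lower())
to_prompt = Step(lambda q: f"Question: {q}\nAnswer:")
fake_llm = Step(lambda prompt: f"(model output for {len(prompt)} characters of prompt)")

chain = normalize | to_prompt | fake_llm
print(chain.invoke("  What is RAG?  "))
```

Because each step only sees the previous step's output, any one of them can be swapped (a different prompt template, a different model) without touching the rest of the chain.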

Let’s break down the key LangChain components crucial for building a RAG pipeline:

- Document Loaders:

- Function: The first step in any RAG system is to ingest your data. LangChain provides a wide array of Document Loaders that can read information from places like PDFs, web pages, Notion databases, Google Drive, SQL databases, and more. They convert raw data into a standardized Document format, typically comprising content and metadata.

- Analogy: Think of document loaders as the initial data acquisition team. They are responsible for gathering all the necessary books and papers from various archives and bringing them into a central library.

- Text Splitters:

- Function: Once documents are loaded, they are often too large to be directly passed to an LLM, which typically has a token limit for its input. Text Splitters divide large documents into smaller, semantically meaningful “chunks.” This process is critical for efficient retrieval, as we want to retrieve only the most relevant portions of text, not entire multi-page documents.

- Analogy: If document loaders are the data acquisition team, text splitters are like a meticulous librarian. They break down long texts (books) into manageable sections (chapters or paragraphs) to make it easier to find specific information without needing to read the entire volume.

- Embeddings and Vector Stores:

- Function: To enable semantic search (finding information based on meaning rather than just keywords), we need to convert our text chunks into numerical representations called embeddings. An embedding model transforms text into a high-dimensional vector. These vectors are then stored in a Vector Store (e.g., Chroma, FAISS, Pinecone). When a user poses a query, that query is also converted into an embedding, and the vector store efficiently finds the most similar text chunk embeddings.

- Analogy: Embeddings are like creating a detailed conceptual index for each section of a book, where similar concepts are grouped numerically. The vector store is the sophisticated card catalog that uses this conceptual index, allowing you to find chapters that discuss “quantum physics” even if your query uses a different phrase like “subatomic mechanics.”

- Retrievers:

- Function: Retrievers are a core LangChain component that handles the actual search within your vector store. Given a user’s input, the retriever queries the vector store (using the query’s embedding) to fetch the most relevant text chunks. These chunks are then passed along in the pipeline. LangChain offers various retriever types, from basic similarity search to more advanced contextual compression or ensemble retrievers.

- Analogy: If the vector store is the smart card catalog, the retriever is the expert reference librarian. When you ask a question, this librarian knows exactly how to navigate the catalog to pull out the most pertinent sections of text that directly answer your query.

- Chains (LCEL) and Agents:

- Function: Finally, LangChain’s Chains (and the newer LangChain Expression Language, LCEL) allow you to define the sequence of operations: take the user query, pass it to the retriever, combine the retrieved documents with the original query into a prompt, send the prompt to an LLM, and get a final response. This sequential execution is what forms the “chain.” More complex Agents can even decide which tools to use based on the input, dynamically executing multiple chains.

- Analogy: This is the conductor of the orchestra. It takes your initial request, directs the reference librarian to find the relevant sections, packages those sections and your question neatly, hands it to the brilliant academic, and then delivers the academic’s well-informed answer back to you.
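To make the flow from loading through retrieval concrete, here is a dependency-free sketch of the components described above. It is a toy under stated assumptions, not LangChain's API: every name (`Document`, `load`, `split`, `embed`, `VectorStore`) is hypothetical, the "embedding" is a plain word count rather than a learned dense vector, and the source texts are invented. In a real pipeline, LangChain's loaders, splitters, embedding models, and vector store integrations would replace these functions.

```python
from __future__ import annotations

import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Document:
    """Text plus metadata, mirroring the content/metadata shape described above."""
    content: str
    metadata: dict = field(default_factory=dict)


def load(raw: dict[str, str]) -> list[Document]:
    # "Loader": wrap each raw source as a Document tagged with its origin.
    return [Document(text, {"source": name}) for name, text in raw.items()]


def split(docs: list[Document], chunk_size: int = 12, overlap: int = 3) -> list[Document]:
    # "Splitter": fixed-size word windows; overlap keeps context across boundaries.
    chunks = []
    step = chunk_size - overlap
    for doc in docs:
        words = doc.content.split()
        for start in range(0, len(words), step):
            piece = " ".join(words[start:start + chunk_size])
            chunks.append(Document(piece, {**doc.metadata, "start_word": start}))
            if start + chunk_size >= len(words):
                break  # this window already reached the end of the document
    return chunks


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. This captures shared vocabulary only,
    # whereas a real embedding model captures meaning.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorStore:
    """Holds (vector, chunk) pairs and ranks them against a query vector."""

    def __init__(self, chunks: list[Document]) -> None:
        self.entries = [(embed(c.content), c) for c in chunks]

    def search(self, query: str, k: int = 2) -> list[Document]:
        # "Retriever": embed the query the same way, then return the k closest chunks.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


# Hypothetical source texts.
raw_sources = {
    "hr_policy.txt": "Employees accrue vacation days monthly. Unused vacation days carry over for one year.",
    "it_guide.txt": "Reset your password from the login page. Passwords expire every ninety days.",
}
store = VectorStore(split(load(raw_sources)))
top = store.search("How often do passwords expire?", k=1)
print(top[0].metadata["source"], "->", top[0].content)
```

The final chain step would take the chunks returned by `search`, combine them with the user's question into a prompt, and send that prompt to the model. Note that a word-count "embedding" cannot match “quantum physics” to “subatomic mechanics”; that kind of match is exactly what a learned embedding model adds.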

Real-World Impact and Future Applications

The power of RAG, particularly when implemented with a framework like LangChain, extends to a multitude of real-world scenarios:

- Enterprise Knowledge Bots: Companies can build internal chatbots that answer employee questions based on vast internal documentation, HR policies, IT guides, and project specifications, ensuring consistent and accurate information.

- Customer Support Enhancements: RAG-powered systems can provide immediate, accurate answers to customer queries drawn directly from product manuals, FAQs, and support articles, improving customer satisfaction and reducing agent workload.

- Research and Development: Researchers can query extensive databases of scientific papers, patents, or clinical trial data to quickly find relevant information and synthesize insights.

- Legal and Compliance: Law and compliance departments can leverage RAG to navigate complex legal texts and regulatory documents, ensuring adherence to guidelines and providing informed advice.

Conclusion

Retrieval-Augmented Generation represents a fundamental architectural pattern in modern AI application development, bridging the gap between an LLM’s general knowledge and your specific data requirements. LangChain democratizes access to this powerful technique by providing a modular, extensible framework that simplifies the construction of sophisticated RAG pipelines. For developers venturing into AI application development, mastering RAG with LangChain is not just about building a feature; it’s about building intelligent systems that are grounded in reality, accurate, and truly useful. This capability is pivotal for unlocking the next generation of AI applications that are precise, dynamic, and contextually aware.